Yuhao Ge

SDE @ Google | MSCS @ UIUC | ex-SDE @ Amazon AWS | ex-SDE @ TikTok

I am an LLM infrastructure and performance engineer with rare cross-accelerator expertise (TPU, Trainium, GPU). My work spans the full LLM infrastructure stack, from compiler optimization and kernel implementation to large-scale training and inference systems.

Currently, I am working at Google, where I focus on improving Gemini training and serving performance on TPU and on enhancing the third-party TPU experience through TorchTPU.

Previously, I worked at Amazon AWS, Annapurna Labs, where I focused on LLM performance optimization on Trainium chips, primarily working on compiler optimization, kernel language design, and kernel optimization.

I was a Master’s student in Computer Science at UIUC, working under Prof. Charith Mendis. My research interests span Machine Learning, Compilers, and LLM Efficiency.

I earned dual B.S. degrees in Computer Engineering from Zhejiang University and the University of Illinois at Urbana-Champaign through their joint program. I was also a visiting research student at the UCLA VAST Lab, under the supervision of Prof. Jason Cong, where I worked on FPGA accelerator design automation. Additionally, I have interned at TikTok and NFTGo.

🎉 news

| Date | News |
|---|---|
| Nov 01, 2025 | 🚀 I joined Google as a Software Engineer, working on improving Gemini training and serving performance on TPU. |
| Apr 09, 2025 | Our paper, “SPLAT: A Framework for Optimised GPU Code-Generation for SParse reguLar ATtention”, has been accepted at OOPSLA 2025. |
| Mar 01, 2025 | 🚀 I joined Amazon AWS, Annapurna Labs, as a software engineer. |
| Aug 09, 2024 | I received a Certificate of Appreciation from the Neuron Compiler team at Amazon Annapurna Labs for developing autotuning infrastructure that significantly improved AWS Trainium performance. This project was recognized as one of the most impactful contributions during my internship. |
| May 20, 2024 | I joined Amazon AWS, Annapurna Labs, as a software engineer intern. I am working with the Compiler team on AWS Neuron, which aims to optimize deep learning training and inference on AWS Trainium and Inferentia chips. |

💼 work

| Organization | Role | Period |
|---|---|---|
| Google | Software Engineer | 2025.11 - Now |
| Amazon, Annapurna Labs | Software Engineer | 2025.3 - 2025.11 |
| Amazon, Annapurna Labs | Software Engineer Intern | 2024.5 - 2024.8 |
| NFTGo | Machine Learning Engineer Intern | 2023.2 - 2023.6 |
| TikTok | Software Engineer Intern | 2022.5 - 2022.8 |

🎓 education

| Institution | Degree / Role | Period |
|---|---|---|
| University of Illinois at Urbana-Champaign (UIUC) | M.S. in Computer Science | 2023.9 - 2024.12 |
| University of California, Los Angeles (UCLA) | Visiting Student Researcher | 2022.5 - 2022.9 |
| University of Illinois at Urbana-Champaign (UIUC) | B.S. in Computer Engineering | 2019.9 - 2023.5 |
| Zhejiang University (ZJU) | B.S. in Computer Engineering | 2019.9 - 2023.5 |

📝 selected papers

- SPLAT

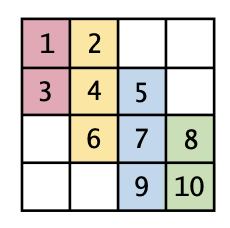

SPLAT: A Framework for Optimised GPU Code-Generation for SParse reguLar ATtention (OOPSLA '25)

Ahan Gupta, Yueming Yuan, Devansh Jain, Yuhao Ge, David Aponte, Yanqi Zhou, Charith Mendis

We proposed a novel sparse format, ACSR, and a code-generation scheme, SPLAT, to achieve both generality and performance in diverse sparse-MHSA patterns on GPUs, resulting in significant speedups over Triton and TVM.


🥁 drumming

Drumming has been a consistent passion in my life, helping me maintain creativity and rhythm in everything I do. Check out my videos here, or visit my YouTube and Bilibili channels.